Thu 28 May 2015

Science Doesn’t Know Everything

Posted by anaglyph under Philosophy, Science, Skeptical Thinking, SmashItWithAHammer, Words

In my recent adventures in the social media universe, I’ve been seeing, more and more often, a certain riposte to arguments that champion science. It comes in the form of “…but science can’t be a hundred percent sure of it, right?” You’ll have seen the same thing, I’m sure: you proffer that global warming is a serious problem, with over 95% of scientists working in climate science attesting to its seriousness, and someone chimes in with the argument that because there’s that 5% for whom the jury is out, ((And that’s an important thing to remember here: the 95% figure that’s often quoted refers to the scientists who are certain, but that does not imply in any way that the other 5% are just as certain that global warming is not happening or not of concern. Some of that 5% just don’t think the data is in. That’s a very different prospect from having an unequivocal position against.)) there is some question over the validity of the great weight of argument in favour.

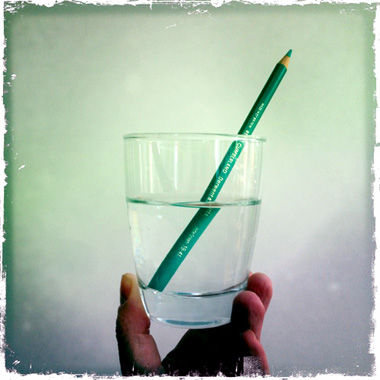

It’s difficult to get most non-scientific people to understand the philosophical cornerstones on which science is built, but the one that causes the most trouble is, perhaps, the scientific idea of falsifiability. Simply put, it works like this: a scientific claim is posed in such a way that it could, in principle, be shown to be wrong, and it is then judged by how robustly it stands up to attempts to pull it down.

Let me give you a very basic example. Let’s suppose that one day I leave an apple out on the bench in my back yard. The next day, I notice that the apple has been knocked to the ground and there are bites out of it. I advance to you an hypothesis: fairies at the bottom of the garden have a love of apples and they are the culprits. If you chose to disagree with this interpretation of the situation, and were to approach this scientifically, you might question my hypothesis and devise ways to show me that my suggestion ((For that’s really what an hypothesis is; a fancy kind of ‘suggestion’)) is not the best explanation for the facts. You might, for example, decide to leave out a new decoy apple, stay up all night and, from a hidden spot, observe what happens to it. You might rig up a camera to photograph the apple if it is moved. You might put out a plastic apple and see whether it gets eaten or moved. There are numerous things you might do to chip away at my hypothesis.

Together – depending on the evidence you gathered – we would establish the likelihood of my hypothesis being correct, and in the event that it started to seem unlikely, gather additional evidence that might set us on our way to a new hypothesis involving another explanation. Possums, maybe.

You might think that this is a simplistic, and perhaps even patronising, illustration. But consider this: you can never, ever, prove to me definitively that fairies weren’t responsible for that first apple incident, or any subsequent incidents that we weren’t actively observing. This is because we have no explicit data for those times.

The philosophy of scientific process unequivocally requires that it be like this. It seems like a bizarre Catch-22, but the very idea is a sort of axiom built into the deepest foundations of science, and an extremely valuable one, because it allows everything to be re-examined by the scientific process should additional persuasive data appear. It’s a kind of ‘don’t get cocky, kid’ reminder. It’s a way for the scientific process to be flexible enough to cope with the possibility of new information. If we didn’t have it, science would deteriorate rapidly into dogma.

The problem is that people who don’t understand science very well tend to think rather too literally about this ‘loophole’ of falsifiability. They take it to mean that, if we ran the experiment in my backyard for a thousand nights, and on 999 of those nights we got photos of the possums chewing on the apple, then on the one night when the camera malfunctioned the apple could actually have been eaten by fairies. Worse than that, they mistakenly go on to extrapolate that the Fairies Hypothesis therefore has equal weight with the Possum Hypothesis.

Even worse still, this commitment of science not to make assessments on the data it does not have is frequently wheeled out by an increasing number of people as if it’s a profound failing – a demonstration that ‘science is not perfect’.

But here, I will argue to the contrary. At least, I will say that science may not be perfect, but it does its very best to strive to understand where the flaws in its process might arise, and take them into account.

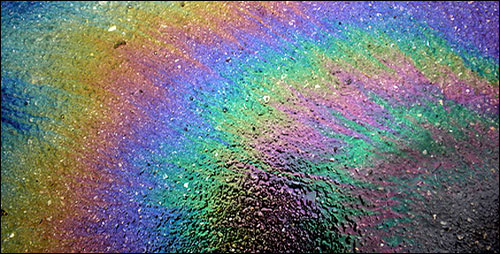

This should not be taken to mean, however, that nothing in science has any certainty, and everything is up for grabs. Why? Because science is all about probabilities. If you are not comfortable with talking in the language of probabilities, then you should really butt right out of any scientific discussion. ((If you can’t think in probabilities, you almost certainly have a heck of a time living your life too, because – hear me – nothing is certain.))

Of course, in the fairies vs possums scenario, we’ve already taken the probabilities into account: our brains can’t help but favour the hypothesis that we think is the most likely, given the observations that have accumulated over our lives. We know that possums like fruit; we know that they are active at night; we have seen possums. On the other hand, we have little evidence for the predilection of fairies for apples, or even for the existence of such beings. Taking into account all the things we know, it’s much more likely to be possums eating the fruit than it is to be fairies. But I will reiterate – because it’s important – that there is no way that anyone can ever scientifically prove to you that, the one time out of a thousand when you weren’t looking, it wasn’t the fairies who took a chomp on the apple.

But you still know it wasn’t, right?
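If you like to see that intuition with a rough number on it, here is one way it could be sketched. This is only an illustration, not a claim about how any real study is analysed: it uses Laplace’s rule of succession, the function name is made up for the purpose, and it assumes we start with no opinion at all about how often possums are the culprit (a uniform prior), which is wildly generous to the fairies. It also lumps the fairies in with every other non-possum explanation.

```python
from fractions import Fraction


def prob_next_night_is_possum(possum_nights: int, observed_nights: int) -> Fraction:
    """Rule of succession: chance the next (unobserved) night is a possum night.

    Assumes a uniform prior over the unknown possum rate, i.e. before any
    observation, 'possum' and 'not possum' are treated as equally plausible.
    """
    if not 0 <= possum_nights <= observed_nights:
        raise ValueError("possum_nights must be between 0 and observed_nights")
    return Fraction(possum_nights + 1, observed_nights + 2)


# 999 nights on camera, possums every single time; one night the camera failed.
p_possum = prob_next_night_is_possum(999, 999)
p_not_possum = 1 - p_possum  # fairies, plus every other non-possum explanation

print(f"possum on the missing night:     {p_possum} (about {float(p_possum):.4f})")
print(f"not a possum on the missing night: {p_not_possum} (about {float(p_not_possum):.4f})")
```

Even starting from a coin-flip position, the unobserved night comes out at 1000 to 1 in favour of the possums. That is not proof, and it never will be, but it is a very long way from the two hypotheses carrying equal weight.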

This is the point where it gets frustrating for real scientists doing real science. Fairies vs possums is a reasonably trivial scientific case, and most ((But not all, trust me…)) people have the educational tools to make a proper and rational assessment of the situation. However, in the case of a non-scientific person arguing that because 5% of scientists don’t agree with the rest on global warming there’s a cause for doubt on the whole thing, this looks to scientists – the people in possession of the greater number of facts and understanding of those facts – like someone arguing that the fairies ate the apple.

It’s not just the Climate Change discussion that suffers from this problem. A large part of the reason we now get into these kinds of debates is that our scientific understanding of the world has, in this age, become so intricate and detailed that it’s very difficult for non-specialists to properly grasp the highly complex nature of certain subjects. Climate science is one of those areas. Evolution is another, and vaccination one more. Because most of us don’t have a lifetime’s worth of education in any of these highly complex fields, and our brains don’t have the tools we need to assess the required data in any meaningful way, we tend to fall back on thinking patterns that are more attuned to the solving of simple, easily defined problems. We then superimpose those simple-to-understand patterns on subjects we don’t understand. Everyone does this, whether it’s in an effort to understand economics, or politics, or even our phone’s data plan. We just can’t help it.

What’s truly sad and frustrating is that when scientists tell us things that are hard to understand, don’t fit with what we know, and are not things we want to hear, many people (including, it has to be said, far too many of the politicians who make the decisions that rule our lives) start to try to find reasons why the scientists MUST be wrong. I’m sure you’ve heard all the variations: scientists are in it for their own agendas (the Frankenstein scenario); they’re being paid to give false results by Big Pharma/Agriculture/Data/Tech/Whatever; or, as we’ve discussed, they don’t know everything.

Science doesn’t know everything. The thing is, contrary to what a lot of people seem to believe, it knows that it doesn’t know everything, and this understanding of its limits is built into its very structure. As such, it is not a weakness, but a very great strength.

___________________________________________________________________________

PS: This is the very first time on TCA that I’ve deployed a clickbait headline… and I’m not sorry.